It was bound to come up sooner or later, and I even surprised myself by optimistically leaving it off my list of rants and potential future blog posts. But can commercial simulation really predict the effect of press speed variation on the forming of parts?

- yes and no (or, more apropos, no, but kind of)

Funnily enough, this has been brought up in several contexts recently: 1st, by a colleague who just addressed the issue with a customer in tech support; 2nd, by a colleague who ran into this question during training and answered it correctly, only to have a more technically minded person give a roundabout, not-quite-correct interpretation; and 3rd, in a conversation with a third party who was relating stories of how our competition answered this question.

To break it down fact by fact:

- commercial simulation codes typically assume a single hardening curve for the entire simulation

- most material properties reported by steel vendors are also measured at a single, constant strain rate

- mild steel has positive strain rate sensitivity (which means it stretches better fast than slow)

- many steel parts will split if run fast in the press (and may form better in inch mode or at slower press speeds)

The first two facts explain why, most of the time when people run a stamping simulation, they will not see any effect of press speed: strain rate is simply not a consideration. For example, if I run a simulation at a press speed of 1 mm/s vs 1000 mm/s, the stretching in the simulated die will show no difference. (OK, if you are running a dynamic explicit solver you will see something different happen, but that would be a result of inertial effects, not material performance.) The rules that govern how the material is formed refer to the hardening curve (stress/strain curve) of the material to determine how it responds to deformation. Since only one curve is provided, there can be only one answer.
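To make the "one curve, one answer" point concrete, here is a minimal sketch assuming a Hollomon-type hardening curve σ = Kεⁿ, optionally multiplied by a power-law strain rate term (ε̇/ε̇₀)^m. The values of K, n, m and the reference rate are illustrative assumptions, not vendor data, and the strain rate is assumed to scale simply with ram speed for the same die. With m = 0 (a single hardening curve) the press speed never enters the answer.

```python
def flow_stress(strain, strain_rate, K=530.0, n=0.22, m=0.0, ref_rate=1.0):
    """Flow stress (MPa) from a Hollomon curve with an optional power-law rate term.

    K, n, m and ref_rate are illustrative assumptions for a mild steel, not measured data.
    With m = 0 the result depends on strain only: a single hardening curve."""
    return K * strain**n * (strain_rate / ref_rate) ** m

# Assumption: strain rate scales with ram speed, so 1 mm/s vs 1000 mm/s
# becomes two hypothetical strain rates three decades apart (1/s).
slow_rate, fast_rate = 0.001, 1.0

for m in (0.0, 0.01):
    slow = flow_stress(0.2, slow_rate, m=m)
    fast = flow_stress(0.2, fast_rate, m=m)
    print(f"m = {m}: slow {slow:.1f} MPa, fast {fast:.1f} MPa, ratio {fast / slow:.3f}")
```

With m = 0 the ratio is exactly 1; with an assumed m of 0.01 it is 1000^0.01, only about a 7% change in flow stress over three decades of speed.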

The next two facts are more interesting, as they lead people to think I have misspoken and contradicted the 3rd statement with the 4th. But in fact both statements are correct. Mild steel has POSITIVE STRAIN RATE SENSITIVITY, which means that in successive tests of the same heat of steel at different speeds of deformation, the steel will show a higher resistance to strain (deformation) when pulled faster. If the steel shows this increased resistance to deformation as speed increases, the stretching will be distributed more uniformly when it is pulled fast, so it should perform better fast than slow (less likely to fracture, since fracture is a sign of non-uniform deformation).
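Positive strain rate sensitivity is usually expressed as the exponent m in σ ∝ ε̇^m. A minimal sketch of how m could be estimated from two tensile tests on the same heat, read at the same strain but pulled at different speeds; the stresses and rates below are hypothetical numbers for illustration, not test results.

```python
import math

def rate_sensitivity(stress_slow, stress_fast, rate_slow, rate_fast):
    """Estimate m = d(ln sigma) / d(ln strain_rate) from two tests at the same strain.

    A positive m means the steel resists deformation more when pulled faster."""
    return math.log(stress_fast / stress_slow) / math.log(rate_fast / rate_slow)

# Hypothetical readings at the same strain from a slow and a fast tensile test.
m = rate_sensitivity(stress_slow=360.0, stress_fast=385.0, rate_slow=0.001, rate_fast=1.0)
print(f"estimated m = {m:.4f}")
```

Because m acts on the logarithm of the rate, it takes decade-sized changes in speed to move the flow stress noticeably.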

Brief aside (that should be a footnote, but I don’t know how to do that here):

This is entirely different from the explanation given by some “dieology” gurus, or other self-proclaimed die experts, that steel is like Silly Putty. Silly Putty, as you might know, is a material that stretches very far without breaking when you stretch it slowly, and seems to fracture quickly when stretched fast. BUT this is a major mistake, typical of those who confuse formability with ductility. The slow stretching of Silly Putty starts a cycle of non-uniform deformation that we in stamping would consider a fracture/smile/neck. All that deformation of Silly Putty at slow speeds is the part failing, getting weaker and weaker because we stretched it slowly. On the other hand, if you stretch Silly Putty fast, it fractures with almost no necking. They offer this as an explanation for why some parts can only be made when the press is run slow, but it has nothing to do with the behavior that is observed. SORRY.

So does steel like being deformed SLOW more than fast? NOPE. Steel has positive strain rate sensitivity. Attempts to deform it fast result in the steel stepping up and acting stronger in the deforming area, which resists the onset of localized necking. This is GOOD, so stamping steel parts fast should be good too. In fact, if we did go so far as to load a simulation code with the appropriate strain rate curves for the steel and run simulations at various speeds, we would see that the faster simulations show a better distribution of thinning, more uniform deformation, and therefore better forming. Again, SORRY.
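One way to see why a positive m resists localized necking is a simplified "frozen strain" estimate (an illustrative assumption, not a full necking analysis). Once strain hardening can no longer make up for the thinner cross-section, a neck whose area is a fraction f smaller than the surrounding sheet must carry the same force, so its flow stress must be higher by a factor of 1/(1 - f). If strain rate is the only source of that extra strength, σ ∝ ε̇^m requires the neck to strain faster than the bulk by (1/(1 - f))^(1/m). The f and m values below are assumptions.

```python
def neck_rate_ratio(area_defect, m):
    """Strain rate in a neck relative to the surrounding sheet.

    Simplified 'frozen strain' estimate: the neck (area smaller by area_defect)
    must carry the same force, so its flow stress must be 1/(1 - area_defect)
    higher; with sigma ~ strain_rate**m that needs a rate ratio of
    (1/(1 - area_defect))**(1/m). Strain hardening differences are ignored."""
    if m <= 0:
        return float("inf")  # no rate strengthening: nothing stops the neck from running away
    return (1.0 / (1.0 - area_defect)) ** (1.0 / m)

# Assumed 1% area defect, compared across a few hypothetical m values.
for m in (0.001, 0.01, 0.02):
    print(f"m = {m}: neck strains {neck_rate_ratio(0.01, m):.1f}x faster than the bulk")
```

The larger m is, the less the neck has to outpace the rest of the sheet to hold the load, so strain keeps spreading out instead of concentrating.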

Another disappointing revelation: strain rate sensitivity manifests itself only over greatly varied speeds (e.g., 10 m/s to 100 m/s to 1000 m/s), and the effects of relatively modest changes, say 0.25 m/s vs 0.35 m/s, won’t be noticeable. Now consider: just how fast does the ram of your press go, and how much faster will it go if the cycle rate doubles? If you said “double”, SORRY, that’s not the whole story. Ram velocity on a mechanical press varies throughout the stroke. The ram has no velocity at the top and bottom of the stroke (where it reverses direction) and moves fastest at midstroke. In most stamping operations the work of the press is done in the bottom few inches (tens of millimeters) of the stroke, usually within the last 15 to 20 degrees of crank angle, where the ram moves far slower than at midstroke. So while doubling the cycle rate roughly doubles the ram speed at any given crank angle, the absolute speed in the forming zone stays low, and a factor of two is nowhere near the order-of-magnitude jumps needed for strain rate sensitivity to show up.
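To put numbers on how ram speed changes through the stroke, here is a minimal sketch of an ideal in-line slider-crank (a plain crank press). It assumes constant crank speed (no flywheel slowdown under load), and the 1 m stroke and 2 m connecting rod are hypothetical dimensions chosen to match the worked example that follows.

```python
import math

def ram_speed(theta_deg, spm, stroke_m=1.0, rod_m=2.0):
    """Ram speed (m/s) of an ideal slider-crank at crank angle theta_deg.

    0 deg = top dead center, 180 deg = bottom dead center. Assumes constant crank
    speed; stroke_m and rod_m are hypothetical dimensions."""
    r = stroke_m / 2.0                      # crank radius is half the stroke
    omega = 2.0 * math.pi * spm / 60.0      # crank speed in rad/s
    th = math.radians(theta_deg)
    s, c = math.sin(th), math.cos(th)
    # d/d(theta) of slider position x = r*cos(th) + sqrt(rod^2 - (r*sin(th))^2)
    dx_dtheta = -r * s - (r * r * s * c) / math.sqrt(rod_m**2 - (r * s) ** 2)
    return abs(omega * dx_dtheta)

def height_above_bdc(theta_deg, stroke_m=1.0, rod_m=2.0):
    """Ram height (m) above bottom dead center at crank angle theta_deg."""
    r = stroke_m / 2.0
    th = math.radians(theta_deg)
    x = r * math.cos(th) + math.sqrt(rod_m**2 - (r * math.sin(th)) ** 2)
    return x - (rod_m - r)   # slider position at BDC is rod_m - r

for spm in (10, 20):
    peak = max(ram_speed(a, spm) for a in range(0, 181))
    for angle in (160, 170, 175):
        print(f"{spm} spm, {180 - angle} deg before BDC "
              f"({height_above_bdc(angle) * 1000:.0f} mm up): "
              f"{ram_speed(angle, spm):.3f} m/s (peak {peak:.3f} m/s)")
```

The printout shows how far below the midstroke peak the ram is moving in the last few degrees before bottom dead center, which is where the forming actually happens.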

Doing the math for a press with a 1 meter stroke going from 10 spm to 20 spm (I’ll even spot you average press speed): at 10 spm the ram covers 2 m every 6 seconds (about 0.33 m/s). When we double the cycle rate to 20 spm, that’s 2 m every 3 seconds (about 0.67 m/s). You would be hard pressed (no pun intended) to find data on the variation in strain rate sensitivity for such a small change in strain rate.
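Carrying the average-speed numbers one step further with the power-law rate term σ ∝ ε̇^m, and assuming (for illustration) that strain rate scales with ram speed for the same die and that m is about 0.01 for mild steel, doubling the cycle rate changes the flow stress by well under one percent:

```latex
\bar{v}_{10} = \frac{2 \times 1\,\text{m}}{6\,\text{s}} \approx 0.33\,\text{m/s}, \qquad
\bar{v}_{20} = \frac{2 \times 1\,\text{m}}{3\,\text{s}} \approx 0.67\,\text{m/s}

\frac{\sigma_{20}}{\sigma_{10}} = \left(\frac{\dot{\varepsilon}_{20}}{\dot{\varepsilon}_{10}}\right)^{m}
\approx \left(\frac{\bar{v}_{20}}{\bar{v}_{10}}\right)^{m} = 2^{0.01} \approx 1.007
```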

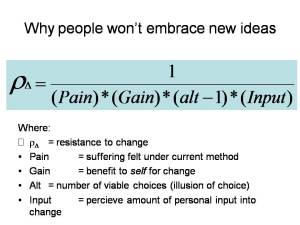

So why do my dies produce splits more readily at these higher cycle rates?

The answer is in the question: it may be the dies, not the steel.

Your dies may not behave as well at higher speeds as they do at slower speeds. Sorry to say, everybody’s favorite scapegoat, the material, might have nothing to do with this problem. More likely, your press and die are not behaving consistently from fast to slow:

- The press is more likely to settle into alignment when we cycle slowly; at high rates it can remain crooked and distribute forming pressure unevenly

- Die alignment may be better slow than fast

- Your lube might work better at slow sliding velocities than at fast ones

- The die doesn’t dissipate heat as well when cycle time is short

- Your pressure system (nitrogen springs, etc.) might show speed sensitivity

- Your part location system might be unstable at high speeds

- Your automation might locate the part differently at higher rates (especially pneumatic systems, which can’t drop parts any faster just because the press is running faster)

- Trapped air under the part or in the die can affect forming more at fast speeds than slow (venting issues)

Those are just a few that come to mind off the top of my head.

Can simulation give me any indication?

An indication, yes, but not a direct answer.

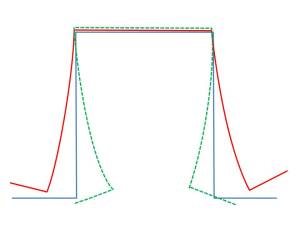

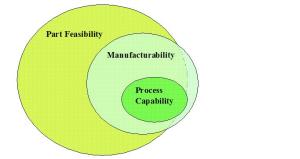

If we run a set of simulations stochastically, allowing for slight variations in binder pressure, friction, lube, bead effect, blank location, material properties, and thickness, we can discover whether a process is prone to variation in results from these changes. If a process is insensitive to these variations, then we know it should produce favorable results at nearly any speed. But if the results are highly variable (going from safe to splitting) for minor changes in bead effect, blank location, or lube (friction), then we can recognize that the process will not be robust and could be vulnerable to something like a change in press speed.
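At its core, a stochastic robustness study like this is a Monte Carlo loop: sample each input from its scatter, run the forming simulation, and look at the spread of the results. In the minimal sketch below, `toy_max_thinning` is a made-up linear placeholder standing in for a real FEA run (its numbers mean nothing physically), and the scatter values, the 25% split limit, and the `sensitivity` parameter are all hypothetical.

```python
import math
import random
import statistics

# Hypothetical 1-sigma scatter for each process input (arbitrary units for this toy).
INPUT_SCATTER = {
    "binder_pressure": 0.05,
    "friction": 0.10,
    "bead_effect": 0.05,
    "blank_location": 1.0,
    "material_strength": 0.04,
    "thickness": 0.02,
}

def toy_max_thinning(deviations, sensitivity):
    """Placeholder for one forming simulation; returns max thinning as a fraction.

    NOT a physical model: each deviation is normalized by its scatter, combined
    into one unit-variance score, and scaled by 'sensitivity'."""
    score = sum(deviations[k] / INPUT_SCATTER[k] for k in INPUT_SCATTER)
    score /= math.sqrt(len(INPUT_SCATTER))
    return 0.18 + sensitivity * score

def robustness_study(sensitivity, n_runs=2000, split_limit=0.25, seed=1):
    """Monte Carlo loop: sample inputs, 'simulate', summarize the spread."""
    rng = random.Random(seed)  # fixed seed so both processes see the same input samples
    results = []
    for _ in range(n_runs):
        deviations = {k: rng.gauss(0.0, s) for k, s in INPUT_SCATTER.items()}
        results.append(toy_max_thinning(deviations, sensitivity))
    split_fraction = sum(r > split_limit for r in results) / n_runs
    return statistics.mean(results), statistics.stdev(results), split_fraction

for label, sens in (("robust process", 0.01), ("sensitive process", 0.05)):
    mean, spread, splits = robustness_study(sens)
    print(f"{label}: mean thinning {mean:.3f}, std dev {spread:.3f}, split rate {splits:.1%}")
```

Both toy processes have the same nominal result; it is the spread and the split rate that flag the second one as vulnerable to something like a press speed change.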

Filed under: engineering | Tagged: CAE, engineering, FEA, finite element analysis, press speed, Sheet Metal forming, stamping, steel